Jason Freitas and Joshua Huang

Mentor: Oleksii Mostovyi

Paper written by these students:

Representation of indifference prices on a finite probability space

Jason Freitas, Joshua Huang and Oleksii Mostovyi

Vol. 18 (2025), No. 3, 495–516

REU participants:

Bobita Atkins, Massachusetts College of Liberal Arts

Ashka Dalal, Rose-Hulman Institute of Technology

Natalie Dinin, California State University, Chico

Jonathan Kerby-White, Indiana University Bloomington

Tess McGuinness, University of Connecticut

Tonya Patricks, University of Central Florida

Genevieve Romanelli, Tufts University

Yiheng Su, Colby College

Mentors: Bernard Akwei, Rachel Bailey, Luke Rogers, Alexander Teplyaev

Publication:

Bernard Akwei, Bobita Atkins, Rachel Bailey, Ashka Dalal, Natalie Dinin, Jonathan Kerby-White, Tess McGuinness, Tonya Patricks, Luke Rogers, Genevieve Romanelli, Yiheng Su, Alexander Teplyaev, Convergence, optimization and stability of singular eigenmaps, arXiv:2406.19510

Eigenmaps are important in analysis, geometry and machine learning, especially in nonlinear dimension reduction.

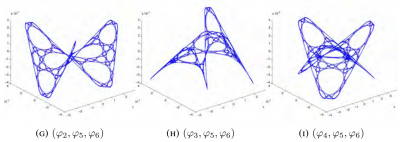

The Laplacian eigenmaps of Belkin and Niyogi, in their various versions, are a widely used nonlinear dimension reduction technique in data analysis. Data points in a high-dimensional space \(\mathbb{R}^N\) are treated as vertices of a graph, for example by joining points separated by a distance of at most a threshold \(\epsilon\), or by joining each vertex to its \(k\) nearest neighbors. A small number \(D\) of eigenfunctions of the graph Laplacian are then taken as coordinates for the data, defining an eigenmap to \(\mathbb{R}^D\). The method was motivated by an intuitive argument suggesting that if the original data consisted of \(n\) sufficiently well-distributed points on a nice manifold \(M\), then the eigenmap would preserve geometric features of \(M\).
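The construction just described can be sketched in a few lines. This is a minimal sketch, not the implementation used in the project: it assumes unit edge weights on the \(\epsilon\)-neighborhood graph and the unnormalized Laplacian \(L = \mathrm{Deg} - W\) (Belkin and Niyogi also consider heat-kernel weights and normalized Laplacians), and the function names are hypothetical.

```python
import numpy as np


def epsilon_graph_laplacian(points: np.ndarray, eps: float) -> np.ndarray:
    """Unnormalized Laplacian L = Deg - W of the epsilon-neighborhood graph.

    `points` has shape (n, N): n data points in R^N (hypothetical helper).
    """
    # Pairwise Euclidean distances between all data points.
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    # Unit-weight edge between distinct points at distance at most eps.
    W = ((dists <= eps) & (dists > 0)).astype(float)
    return np.diag(W.sum(axis=1)) - W


def laplacian_eigenmap(points: np.ndarray, eps: float, dim: int):
    """Map each point to R^dim using the first `dim` nonconstant eigenvectors."""
    L = epsilon_graph_laplacian(points, eps)
    # eigh is for symmetric matrices and returns eigenvalues in ascending order.
    vals, vecs = np.linalg.eigh(L)
    # Column 0 is the constant eigenvector (eigenvalue 0 on a connected graph).
    return vals, vecs[:, 1:dim + 1]


# Example: n = 200 equally spaced points on the unit circle in R^2.
angles = 2 * np.pi * np.arange(200) / 200
circle = np.column_stack([np.cos(angles), np.sin(angles)])
vals, coords = laplacian_eigenmap(circle, eps=0.1, dim=2)
```

With `eps=0.1` each point is joined to its three nearest neighbors on each side, so the graph is connected and circulant. The two eigen-coordinates then span the same space as \(\cos\theta_j\) and \(\sin\theta_j\), every row of `coords` has norm \(\sqrt{2/n}\), and the eigenmap again traces a circle: in this symmetric example the geometry of \(M\) is preserved.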

Several authors have proved rigorous results on the geometric properties of eigenmaps under a variety of assumptions on how the points are distributed, together with hypotheses such as smoothness of the manifold and bounds on its curvature. A typical argument combines two approximations. First, under smoothness and curvature assumptions, the Laplace-Beltrami operator of \(M\) can be approximated, after rescaling by a factor proportional to \(\epsilon^{-2}\), by the operator that takes the difference between the value of a function at a point and its average over the ball of sufficiently small radius \(\epsilon\) around that point. Second, this difference operator can in turn be approximated by graph Laplacian operators, provided the \(n\) points are sufficiently well distributed.
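On an interval, where the balls are the intervals \([x-\epsilon, x+\epsilon]\), the first approximation can be checked by hand: a Taylor expansion gives \(f(x) - \frac{1}{2\epsilon}\int_{x-\epsilon}^{x+\epsilon} f(y)\,dy = -\frac{\epsilon^2}{6} f''(x) + O(\epsilon^4)\) for smooth \(f\) and \(x\) at distance more than \(\epsilon\) from the endpoints. The sketch below confirms this numerically; the test function \(\sin\), the trapezoidal quadrature, and the helper name `averaging_difference` are illustrative assumptions.

```python
import numpy as np


def averaging_difference(f, x: float, eps: float, m: int = 2001) -> float:
    """f(x) minus the mean of f over [x - eps, x + eps] (hypothetical helper)."""
    y = np.linspace(x - eps, x + eps, m)
    fy = f(y)
    # Composite trapezoidal rule for the integral, divided by the length 2*eps.
    mean = np.sum(fy[1:] + fy[:-1]) / 2 * (y[1] - y[0]) / (2 * eps)
    return f(x) - mean


x0 = 0.7
ratios = []
for eps in (0.1, 0.05, 0.01):
    lhs = averaging_difference(np.sin, x0, eps)
    # Predicted leading term -(eps^2 / 6) f''(x0), with f'' = -sin for f = sin.
    rhs = (eps**2 / 6) * np.sin(x0)
    ratios.append(lhs / rhs)

print(ratios)
```

The ratios approach 1 as \(\epsilon\) decreases, which is why the difference operator divided by \(\epsilon^2\) can stand in for a constant multiple of the Laplacian in the interior. Near an endpoint the ball is cut off by the boundary and the expansion instead begins with a first-order term in \(\epsilon\).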

In the present work we consider several model situations where eigen-coordinates can be computed analytically as well as numerically, including intervals with uniform and weighted measures, the square, the torus, the sphere, and the Sierpinski gasket. On these examples we investigate the connections between eigenmaps and orthogonal polynomials, how to determine the optimal value of \(\epsilon\) for a given \(n\) and a prescribed point distribution, and how the method depends on, and how stable it is under, changes in the choice of Laplacian. These examples are intended to serve as model cases for later research on the corresponding problems for eigenmaps on weighted Riemannian manifolds, possibly with boundary, and on some metric measure spaces, including fractals.
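One elementary instance of the link between eigenmaps and orthogonal polynomials, offered as an illustration rather than as a result of the project: for \(n\) equally spaced points on an interval, each joined to its nearest neighbors (a path graph), the Laplacian eigenvectors are known in closed form, \(v_k(j) = \cos(k\theta_j)\) with \(\theta_j = \pi(j + \tfrac12)/n\) and eigenvalue \(2 - 2\cos(\pi k/n)\). Since \(\cos(k\theta) = T_k(\cos\theta)\), the \(k\)-th eigen-coordinate is the Chebyshev polynomial \(T_k\) applied to the first one. The sketch below checks this numerically; `path_laplacian` and `check_chebyshev_eigenvectors` are hypothetical names.

```python
import numpy as np
from numpy.polynomial import chebyshev


def path_laplacian(n: int) -> np.ndarray:
    """Laplacian of the path graph: n points, each joined to its nearest neighbors."""
    W = np.diag(np.ones(n - 1), 1) + np.diag(np.ones(n - 1), -1)
    return np.diag(W.sum(axis=1)) - W


def check_chebyshev_eigenvectors(n: int, kmax: int):
    """Compare numerical eigenpairs with cos(k theta_j) = T_k(cos theta_j)."""
    vals, vecs = np.linalg.eigh(path_laplacian(n))
    theta = np.pi * (np.arange(n) + 0.5) / n
    t = np.cos(theta)  # first nonconstant eigenvector, up to scale and sign
    results = []
    for k in range(1, kmax + 1):
        predicted = chebyshev.Chebyshev.basis(k)(t)  # T_k evaluated at t
        predicted /= np.linalg.norm(predicted)
        # Both vectors have unit norm, so |<v, predicted>| = 1 means equal up to sign.
        alignment = abs(vecs[:, k] @ predicted)
        eig_error = abs(vals[k] - (2 - 2 * np.cos(np.pi * k / n)))
        results.append((k, alignment, eig_error))
    return results


for k, alignment, eig_error in check_chebyshev_eigenvectors(n=50, kmax=5):
    print(f"k={k}: alignment={alignment:.12f}, eigenvalue error={eig_error:.1e}")
```

In particular, because \(T_2(t) = 2t^2 - 1\), a two-dimensional eigenmap of the interval places the points on a parabola (up to scaling) rather than on a line.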

How well graph eigenmaps approximate the eigenmaps of a Laplace operator depends crucially on the scaling parameter \(\epsilon\). If \(\epsilon\) is too small, the graph becomes too sparse, or even disconnected, to reflect the geometry; if it is too large, averaging over large balls no longer approximates the Laplacian. In either case the approximation is inaccurate or breaks down completely. An analytic expression for the optimal \(\epsilon\), however, is out of reach. In our work, we use explicitly solvable models and Monte Carlo simulations to find an approximately optimal value of \(\epsilon\), namely the one that gives, on average, the most accurate approximation of the eigenmaps.
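A Monte Carlo search of this kind might be organized as follows. This is a hedged sketch whose choices are assumptions, not the project's protocol: \(n\) points drawn uniformly from \([0, 1]\), the unit-weight \(\epsilon\)-graph Laplacian, \(\cos(\pi x)\) (the first nonconstant Neumann eigenfunction of the interval) as the reference, and the Euclidean distance between unit-normalized vectors as the error. The helper names are hypothetical.

```python
import numpy as np


def eigenvector_error(n: int, eps: float, rng: np.random.Generator) -> float:
    """Error of the first nonconstant eigenvector for one random sample (hypothetical)."""
    x = np.sort(rng.uniform(0.0, 1.0, n))
    dists = np.abs(x[:, None] - x[None, :])
    W = ((dists <= eps) & (dists > 0)).astype(float)  # unit-weight eps-graph
    L = np.diag(W.sum(axis=1)) - W
    _, vecs = np.linalg.eigh(L)
    # If eps is too small the graph is disconnected, eigenvalue 0 repeats, and
    # this column is then not a meaningful eigen-coordinate.
    u = vecs[:, 1]
    ref = np.cos(np.pi * x)
    ref /= np.linalg.norm(ref)
    # Eigenvectors are determined only up to sign, so compare with both signs.
    return float(min(np.linalg.norm(u - ref), np.linalg.norm(u + ref)))


def sweep_epsilon(n: int, eps_values, trials: int = 20, seed: int = 0) -> dict:
    """Average error over `trials` independent samples for each eps."""
    rng = np.random.default_rng(seed)
    return {
        eps: float(np.mean([eigenvector_error(n, eps, rng) for _ in range(trials)]))
        for eps in eps_values
    }


errors = sweep_epsilon(n=200, eps_values=[0.01, 0.02, 0.05, 0.1, 0.2, 0.4])
best_eps = min(errors, key=errors.get)
print(errors, "approximately optimal eps:", best_eps)
```

Plotting the averaged error against \(\epsilon\) should display the trade-off described above, with larger errors toward both ends of the range; where the minimum falls depends on \(n\) and on the point distribution.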

Our study is primarily inspired by the work of Belkin and Niyogi, “Towards a theoretical foundation for Laplacian-based manifold methods.”

Talk: Laplacian Eigenmaps and Chebyshev Polynomials

Talk: A Numerical Investigation of Laplacian Eigenmaps

Talk: Analysis of Averaging Operators

Intro Text: Graph Laplacians, eigen-coordinates, Chebyshev polynomials, and Robin problems

Intro Text: A Numerical Investigation of Laplacian Eigenmaps

Intro Text: Comparing Laplacian with the Averaging Operator

Poster: Laplacian Eigenmaps and Orthogonal Polynomials

Results were presented at the 2023 Young Mathematicians Conference (YMC) at the Ohio State University, a premier annual conference for undergraduate research in mathematics, and at the 2024 Joint Mathematics Meetings (JMM) in San Francisco, the largest mathematics gathering in the world.